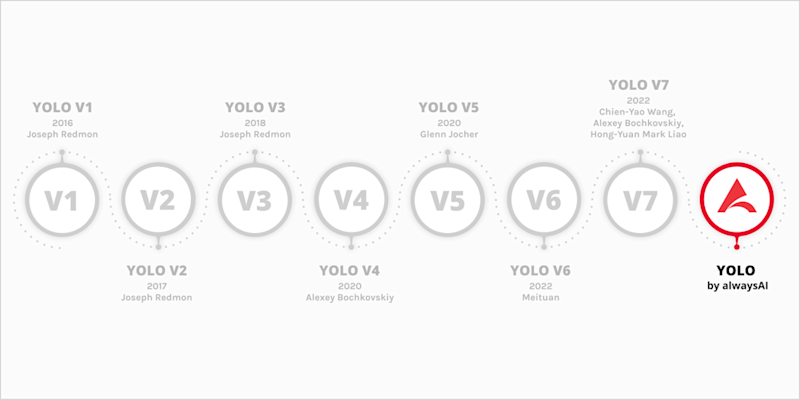

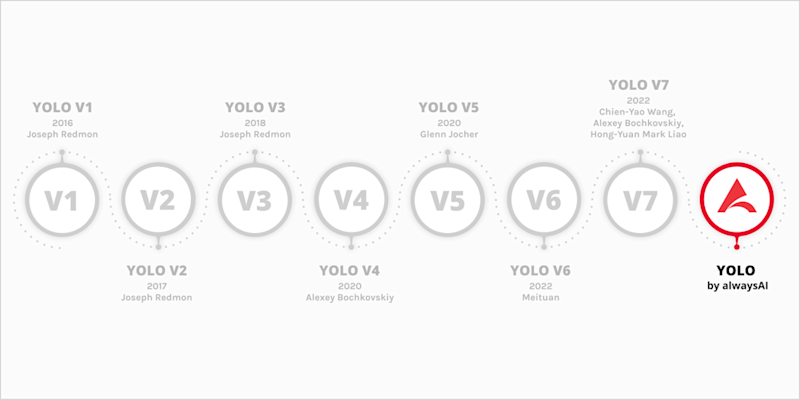

What YOLO Model Architecture Is Best for You?

by Kathleen Siddell